March 5, 2024

By Charlotte Wickham

Desk-based readability assessments are an efficient method of evaluating the readability of your labeling, including your instructions for use (IFU), quick reference guide (QRG), or other user-facing documents (e.g., patient information leaflets). This article discusses the applications of readability assessments and presents an overview of three methods.

Regulatory imperative

In terms of readability, the FDA and MDR set similar expectations, typically expecting a sixth- to eighth-grade reading level for most labeling, with recommendations for even lower levels if the intended users might need them. Some regulators require readability assessments in certain situations. To receive a CLIA Waiver for an in vitro diagnostic (IVD) device, the FDA recommends that your labeling be written at no higher than a seventh-grade reading level. As such, it is beneficial to evaluate the reading level of your labeling before seeking a CLIA Waiver and to include the results of the assessment within your application.

For certain medical devices, such as implants, the EU’s MDR requires that manufacturers develop a summary of safety and clinical performance (SSCP). Where the SSCP will include patient-facing content (e.g., in a section titled “Patient summary”), MDR recommends that user-testing of the SSCP be conducted. However, it ultimately states that the manufacturer may decide the methods by which it determines the readability of the SSCP. As such, a readability assessment can be a way to estimate the reading level and enable effective refinements. Below is a review of three of the most common types of readability assessments. Later, we discuss their limitations and the importance of also conducting comprehension studies (i.e., more in the style of usability testing).

Methods

Flesch-Kincaid

Overview: The Flesch-Kincaid readability assessment uses word length and sentence length to evaluate the readability of a passage. Flesch-Kincaid tests provide two scores: a reading ease score and a reading grade level. Because regulators typically give their guidance in terms of school grades, the reading grade level is usually the more relevant of the two. Long sentences and words with numerous syllables result in a higher grade level.

As an example, consider the two passages below.

The clavicle, i.e., the collarbone, is the most commonly broken bone. The collarbone is slender, and its location between the shoulder blade and upper ribcage makes it highly susceptible to injury during sports activities and car accidents.

Flesch-Kincaid reading level: 12.3 (12th grade)

The most common bone that a person breaks is the clavicle (collarbone). The collarbone is slender. It is located between the shoulder blade and upper ribcage. As a result, it can be broken during sports activities or car accidents.

Flesch-Kincaid reading level: 7.0 (7th grade)

These two paragraphs are intended to convey the same meaning, but by breaking up longer sentences into smaller ones and reducing the instances of words with numerous syllables (e.g., susceptible), the Flesch-Kincaid reading level is lowered from a 12th-grade to a 7th-grade level.
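Both Flesch-Kincaid scores come from published formulas based on average sentence length and average syllables per word. The following is a minimal sketch, assuming you have already counted the words, sentences, and syllables in your passage. The function name `flesch_kincaid_scores` is illustrative, and tools such as Microsoft Word may count syllables and sentences slightly differently, so their results can differ from this calculation.

```python
def flesch_kincaid_scores(words, sentences, syllables):
    """Return (reading_ease, grade_level) from raw counts.

    The counts are supplied by the caller; how syllables and
    sentences are counted is an assumption left to the user.
    """
    if words <= 0 or sentences <= 0:
        raise ValueError("words and sentences must be positive")

    words_per_sentence = words / sentences
    syllables_per_word = syllables / words

    # Flesch reading ease: higher scores mean easier text.
    reading_ease = 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word
    # Flesch-Kincaid grade level: approximate US school grade.
    grade_level = 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59
    return reading_ease, grade_level


# Hypothetical counts: 100 words, 5 sentences, 150 syllables.
ease, grade = flesch_kincaid_scores(words=100, sentences=5, syllables=150)
print(f"Reading ease: {ease:.1f}, grade level: {grade:.1f}")
```

With these hypothetical counts, the grade level works out to roughly 9.9. Shortening sentences lowers the first term of the grade formula, and choosing shorter words lowers the second.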

Benefits: Flesch-Kincaid is a built-in function of Microsoft Word and is therefore very easy to perform. Microsoft Word allows you to conduct Flesch-Kincaid tests on specific sections of text, allowing you to explore the readability of your text with a high level of granularity.

Limitations: In addition to the general limitations of readability assessments, which are discussed later, Flesch-Kincaid has some specific limitations. Notably, it only considers complete sentences in its evaluations; sentences without full stops will be given scores of 0. Therefore, if portions of your text lack full stops (e.g., bulleted lists, addresses, diagram annotations), these will be ignored, and the readability picture provided by the Flesch-Kincaid test might be incomplete.
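One way to see how much of a document might fall outside the Flesch-Kincaid picture is to list the lines that do not end in terminal punctuation before running the test. The sketch below is only a rough pre-check under simple assumptions (one item per line, and `.`, `!`, or `?` as sentence endings). The helper name `lines_without_full_stop` is hypothetical, and the sketch does not reproduce how Microsoft Word itself detects sentences.

```python
def lines_without_full_stop(text):
    """Return non-empty lines that do not end in '.', '!' or '?'."""
    terminal = (".", "!", "?")
    closing = "\"')]”’"  # closing quotes/brackets that may follow the full stop
    flagged = []
    for line in text.splitlines():
        stripped = line.strip()
        if not stripped:
            continue  # skip blank lines
        if not stripped.rstrip(closing).endswith(terminal):
            flagged.append(stripped)
    return flagged


sample = """Remove the cap.
- Needle
- Syringe
Press firmly!

123 Main Street"""

for line in lines_without_full_stop(sample):
    print("No full stop:", line)
```

In this sample, the two bullet items and the address are flagged, which are the kinds of content the limitation above describes.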

SMOG

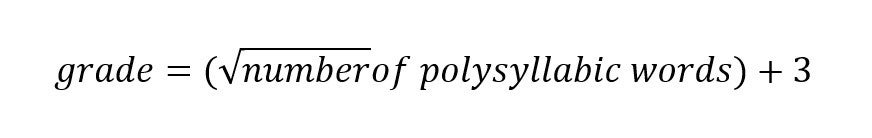

Overview: The SMOG test (which stands for Simple Measure of Gobbledygook) uses a formula that considers the number of polysyllabic words (words with three or more syllables). You only need to count the first instance of a polysyllabic word (i.e., you can ignore repeats). To run a SMOG text, select three ten-sentence-long samples from the text in question and count the number of polysyllabic words. Then, apply the count to the following formula:
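For reference, the commonly published form of the SMOG formula is: grade = 1.0430 × √(polysyllabic word count × 30 / number of sentences) + 3.1291. The version pictured in this article may be presented differently (for example, as a simplified approximation), so treat the following Python sketch as an assumption-based illustration. The function name `smog_grade` is illustrative, and the polysyllabic word count is supplied by you, following whichever counting rule you apply.

```python
import math


def smog_grade(polysyllable_count, sentence_count=30):
    """Estimate the SMOG grade from a polysyllabic word count.

    sentence_count defaults to 30, i.e. three ten-sentence samples.
    """
    if sentence_count <= 0:
        raise ValueError("sentence_count must be positive")
    if polysyllable_count < 0:
        raise ValueError("polysyllable_count cannot be negative")

    # Scale the count to a 30-sentence basis, then apply the formula.
    scaled = polysyllable_count * (30 / sentence_count)
    return 1.0430 * math.sqrt(scaled) + 3.1291


# Hypothetical example: 30 polysyllabic words found in 30 sentences.
print(f"SMOG grade: {smog_grade(30):.1f}")
```

Because the count sits under a square root, halving the number of polysyllabic words lowers the grade by less than half, which is worth keeping in mind when setting revision targets.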

The example text below contains fewer than 30 sentences, and therefore we cannot accurately calculate the SMOG reading level. However, we can expect that the first example would have a higher level based on its greater number of polysyllabic words.

The clavicle, i.e., the collarbone, is the most commonly broken bone. The collarbone is slender, and its location between the shoulder blade and upper ribcage makes it highly susceptible to injury during sports activities and car accidents.

Number of unique polysyllabic words: 8

The most common bone that people break is the clavicle (collarbone). The collarbone is slender. It is located between the shoulder blade and upper ribcage. As a result, it can be broken during sports activities or car accidents.

Number of unique polysyllabic words: 5

Benefits: Some researchers consider SMOG to be more accurate than Flesch-Kincaid (they conclude that Flesch-Kincaid underestimates grade levels) and argue that it should be considered the default test for evaluating healthcare materials. Additionally, the method is more transparent than Flesch-Kincaid to those conducting the test; you can easily see which words are driving up your reading level by identifying the polysyllabic words. The simple formula can be built into a Microsoft Excel spreadsheet or similar tool.

Limitations: There are SMOG calculators available online, but performing the test manually is the best way to guarantee the accuracy of the results. As a result, evaluating large amounts of text can be time-intensive. You can consider evaluating samples of text, but the accuracy of the result will then depend on the uniformity of your text. An accurate SMOG test requires at least 30 sentences and is therefore not suitable for shorter passages. Like Flesch-Kincaid, it also cannot be used to accurately determine the readability of text that does not consist of full sentences.

Fry readability formula

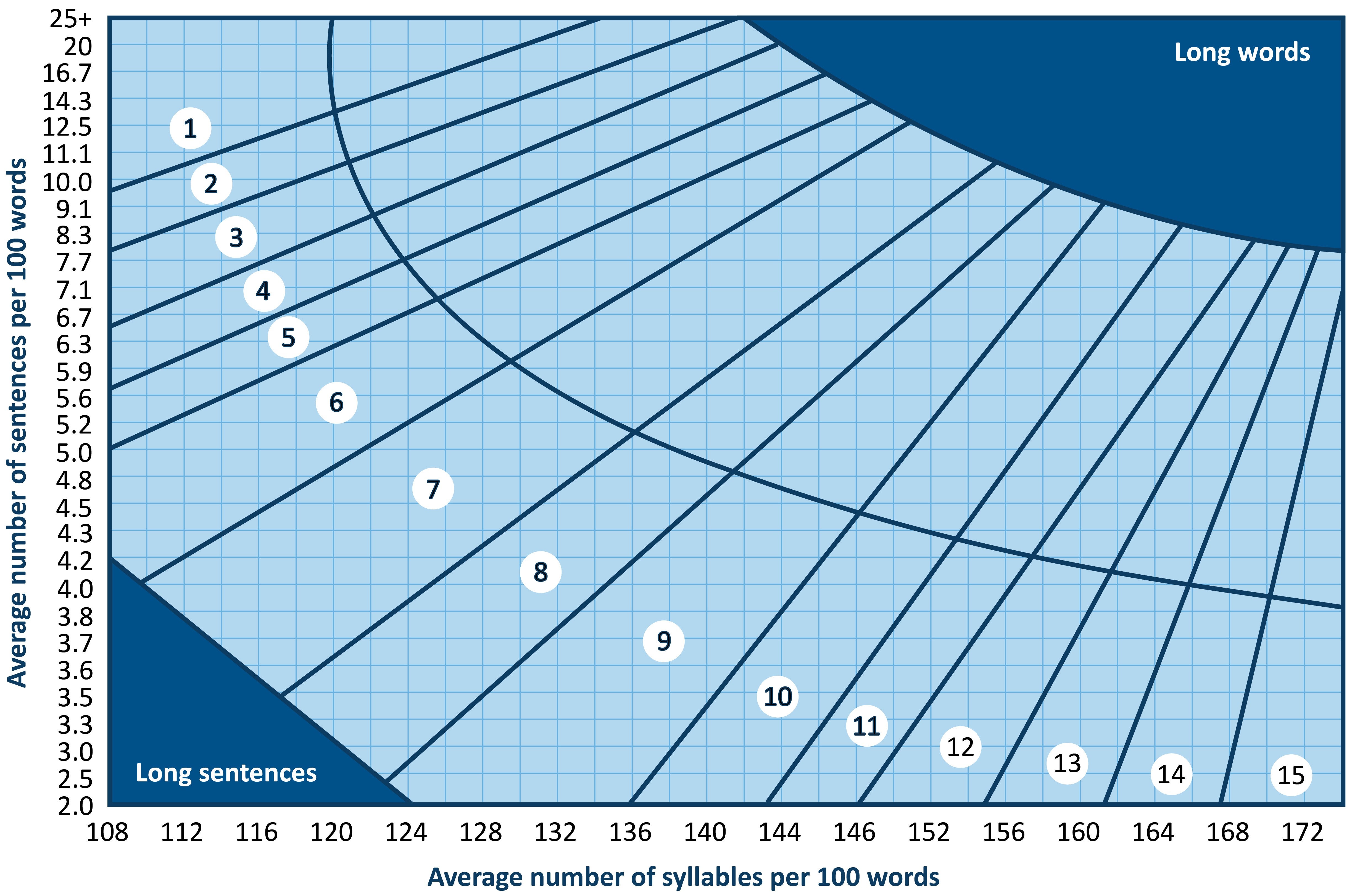

The Fry readability works by taking three 100-word passages and plotting two values—the average number of sentences and the average number of syllables—on the graph shown below. The area in which the resulting plot resides signifies the approximate reading level.

Similar to Flesch-Kincaid, higher reading levels are caused by longer sentences and words with numerous syllables.
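The two values to plot are simple averages across the three passages. As a minimal sketch, assuming you have already counted the sentences and syllables in each 100-word passage (the function name `fry_coordinates` and the sample counts are illustrative), the averages can be computed as follows. Reading the grade level off the Fry graph itself remains a manual step.

```python
def fry_coordinates(samples):
    """Average the counts from several 100-word passages.

    samples: list of (sentence_count, syllable_count) tuples,
             one tuple per 100-word passage.
    Returns (average_sentences, average_syllables) to plot on the graph.
    """
    if not samples:
        raise ValueError("at least one sample is required")

    avg_sentences = sum(sentences for sentences, _ in samples) / len(samples)
    avg_syllables = sum(syllables for _, syllables in samples) / len(samples)
    return avg_sentences, avg_syllables


# Hypothetical counts from the beginning, middle, and end of a document.
passages = [(5.0, 140), (6.0, 150), (7.0, 160)]
avg_sentences, avg_syllables = fry_coordinates(passages)
print(f"Plot {avg_sentences:.1f} sentences and {avg_syllables:.1f} syllables per 100 words")
```

Sentence counts are given as decimals here because a 100-word passage often ends partway through a sentence, so the final sentence is typically counted as a fraction.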

Benefits: The Fry readability assessment provides a bit more granularity than SMOG, enabling you to gain a better understanding of the impact of both the length of sentences and the number of complex (i.e., multi-syllabic) words being used. The method of grade calculation is transparent. Having plotted your score on a graph, you can identify which values would need to be lowered (i.e., syllables or sentences) and how much they should be reduced to lower the approximate grade level of your text.

Limitations: While online calculators are available, performing the test manually is the best way to guarantee accuracy. Evaluating large amounts of text can be time-intensive. Fry uses three 100-word passages, and it is recommended to take these from the beginning, middle, and end of the text. Depending on the length and uniformity of your text, you might need to divide your text into sections to gain the most accurate result.

Readability assessment uses and limitations

Readability assessments are useful for efficiently evaluating your text. Compared to readability assessments with actual participants (often called comprehension studies), they are inexpensive and quick to perform. However, the lack of actual users limits how useful they are. Notably, readability assessments deal only in numbers: none of those presented in this article consider context, word popularity, or the nuances associated with particular groups of readers. For example, some longer words might be more common than their shorter synonyms (e.g., annoying versus vexing). The familiarity of the language used is key to a text’s ease of comprehension, and it is best evaluated with actual users.

Readability assessments are a good initial step to take when evaluating your text. They can help you identify areas of your text that use complex words and long sentences and might therefore induce confusion or fatigue in users. By aiming to reduce the grade level of your text and using readability assessments as a measure, you can reduce the complexity of your text with an iterative approach of drafting, evaluating, re-drafting, and re-evaluating. Having optimized your text according to mathematical measures (syllable counts, sentence length), you can then evaluate it for the familiarity of its language and the clarity of its meaning, which, of course, is best done with the intended readers of the text.

Conclusion

Readability assessments are a useful first step when it comes to evaluating your text. However, each method has its limitations, and ideally, readability assessments should be supplemented by comprehension studies with actual users.

Charlotte Wickham is Senior Human Factors Specialist at Emergo by UL's Human Factors Research & Design division.