August 10, 2023

The proliferation of artificial intelligence (AI) across multiple industries, including medical device manufacturing, offers significant opportunities for innovation but also introduces potential new risks. For the most part, regulatory approaches to AI have yet to take shape as governments work to better understand the new technology and the risks it poses.

As AI becomes more widespread, the European Commission (EC) has proposed new legislation, the Artificial Intelligence Act (AI Act), to establish regulatory pathways for products and technologies that use AI components. In drafting the AI Act, the EC has prioritized the creation of the “…world’s first set of comprehensive rules to manage the opportunities and threats of AI” and, according to section 1.1 of the Explanatory Memorandum, aims “to support socially and environmentally beneficial outcomes and provide key competitive advantages to companies and the European economy.”

“High-risk AI systems should bear the CE marking to indicate their conformity with this Regulation so that they can move freely within the internal market,” reads Recital 67 of the draft AI Act. As we’ve written before, the majority of EU legislation is promulgated as regulations, and the AI Act is no exception: like the MDR and IVDR, it is proposed as a regulation that would apply directly in all member states.

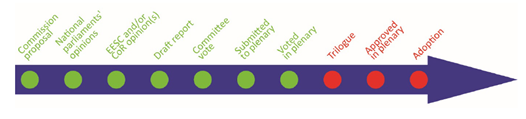

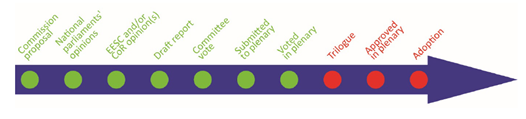

In 2018, after the adoption of the Medical Devices Regulation (EU) 2017/745 (MDR) and the In Vitro Diagnostic Medical Devices Regulation (EU) 2017/746 (IVDR), the first press releases on AI legislation in the EU were published. In June 2023, the European Parliament adopted its position on the draft AI Act, and negotiations with the Council of the EU on the final form of the law began.

Foundations of the AI Act

The new AI Act is part of the New Legislative Framework (NLF), just like the MDR and IVDR. The NLF comprises a portfolio of legislation that shares the same regulatory and compliance structure: products must comply with the applicable “essential” requirements set out in the legislation, generally demonstrated through standards; they then follow one of several conformity assessment routes, some of which require a Notified Body, before the CE marking is affixed.

The AI Act takes a risk-based approach: it defines mandatory requirements for the design and development of AI systems and also regulates how market surveillance is conducted. The draft legislation also describes conformity assessment routes that may involve Notified Bodies. It’s important to note that the AI Act would apply in addition to other relevant EU legislation. For example, a medical device with AI features in its electronic components would, at minimum, need to comply with the MDR, the Restriction of Hazardous Substances Directive (RoHS) and the AI Act.

In addition, certain AI systems and foundation models would need to be registered in an EU database (separate from EUDAMED) to be established and managed by the EC. The database is intended to enhance transparency and to be freely and publicly accessible.

AI Act exclusions

Article 2 excludes certain AI systems and users from the scope of the AI Act, such as AI systems developed or used exclusively for military purposes, and certain public authorities that use AI systems within the framework of international agreements for law enforcement and judicial cooperation. Separately, Article 5 prohibits certain AI practices outright.

Annexes of the AI Act

Three of the AI Act’s annexes are particularly relevant here. Annex I describes the “Artificial Intelligence Techniques and Approaches.” Software developed with any of the techniques and approaches listed in Annex I falls within the AI Act’s definition of an AI system.

Article 6 of the AI Act sets out when an AI system is classified as “high-risk.” Under Article 6, this is the case if both of the following conditions are met (they are cumulative):

- The AI system is intended to be used as a safety component of a product, or is itself a product, covered by the Union harmonization legislation listed in Annex II of the AI Act (which includes NLF legislation such as the MDR and IVDR);

- The product, whether the AI system itself or the product of which it is a safety component, is required to undergo a third-party conformity assessment under the legislation listed in Annex II of the AI Act.

In addition, all AI systems listed in Annex III of the AI Act are considered high-risk.

As indicated above, the MDR and IVDR are NLF legislation listed in Annex II. As such, medical devices that are, or contain as a safety component, an AI system and that require Notified Body involvement under the MDR or IVDR automatically fall into the high-risk AI category. This would imply that a Notified Body would need to conduct the AI Act conformity assessment in addition to the MDR or IVDR assessment.
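To make the cumulative logic of Article 6 concrete, the classification summarized above can be sketched as a simple decision function. This is a minimal sketch under stated assumptions: the class `AISystemProfile`, the function `is_high_risk` and their fields are hypothetical names chosen for illustration, and the model captures only the conditions described in this article, not the full text of the draft AI Act.

```python
from dataclasses import dataclass


@dataclass
class AISystemProfile:
    """Hypothetical, simplified description of an AI system (illustration only)."""
    # True if the AI system is a safety component of a product, or is itself a
    # product, covered by Union harmonization legislation listed in Annex II
    # (for example, the MDR or IVDR).
    covered_by_annex_ii: bool
    # True if that Annex II legislation requires a third-party conformity
    # assessment (for a medical device: Notified Body involvement).
    needs_third_party_assessment: bool
    # True if the system is one of those listed in Annex III.
    listed_in_annex_iii: bool


def is_high_risk(system: AISystemProfile) -> bool:
    """Simplified model of the high-risk classification described above."""
    # Article 6 route: both conditions must hold (cumulative).
    annex_ii_route = system.covered_by_annex_ii and system.needs_third_party_assessment
    # Annex III route: being listed is sufficient in this simplified model.
    return annex_ii_route or system.listed_in_annex_iii


# An AI-based medical device that requires Notified Body involvement.
print(is_high_risk(AISystemProfile(True, True, False)))   # True
# A device covered by the MDR that does not require a Notified Body.
print(is_high_risk(AISystemProfile(True, False, False)))  # False
```

Writing the two Article 6 conditions as a single `and` expression mirrors their cumulative nature, while the separate `or` reflects that Annex III listing is an independent route to high-risk status.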

High-risk AI systems and the complications in the AI Act

High-risk AI systems must comply with the requirements set out in Title III, Chapter 2 of the AI Act, and this is where things get more complex.

For one thing, the terms and definitions used in the AI Act are inconsistent with those in the MDR and IVDR: the same terms appear in both, but they are defined differently.

Second, some of the AI Act’s requirements for high-risk systems conflict with requirements in other EU legislation. For example, the requirements in Article 10 (“Data and data governance”) and Article 12 (“Record-keeping”) are extremely difficult, if not impossible, for medical device manufacturers to meet. Manufacturers are, by definition, not allowed to keep patient-specific data on file, as this would infringe privacy legislation such as the General Data Protection Regulation (Regulation (EU) 2016/679, GDPR). Nor do device manufacturers have visibility into the patient data used during clinical studies to demonstrate clinical benefit, safety and effectiveness. Nevertheless, the manufacturer is required to be able to identify the natural persons involved in the verification of the results.

In addition, the AI Act requires that “training, validation and testing data sets shall be relevant, representative, free of errors and complete.” However, device manufacturers must also comply with the GDPR, and in this context they would find it impossible to verify the accuracy and completeness of data that the GDPR and member states’ healthcare laws prohibit them from accessing.

Similar challenges arise where clinical investigations meet AI Act requirements. Establishing “complete” clinical data sets for devices targeting rare diseases, for example, can take many years. Under the current draft of the AI Act, such devices with AI components could not be placed on the EU market until these data could be obtained and verified, potentially limiting treatment options for patients.

Concluding remarks

We could go on at length about the challenges that still need to be tackled. If the current version of the AI Act is adopted, the contradictions between its terms and definitions and those of the MDR and IVDR would create legal uncertainty for medical device manufacturers. Closer alignment between the AI Act, the GDPR and other data-related legislation must also be considered.

Nevertheless, we also need to recognize that time is ticking. EU lawmakers have begun negotiations to finalize the new legislation: the first trilogue took place in June 2023 and the second in July 2023.

Emergo by UL will monitor the developments regarding the AI Act, and report on how the medical device and IVD sectors will be impacted.
